Introduction

As AI tools like ChatGPT, Claude, and Gemini become embedded in everyday life, a once-niche skill is now essential: prompt engineering. It has rapidly become necessary for developers, educators, creators, and even casual users.

This article explores how Prompt Engineering is evolving in 2025, outlines practical techniques for better prompts, highlights new tools, and examines the emerging frontiers of this fast-changing field.

1. What is Prompt Engineering?

Prompt Engineering is the process of crafting effective inputs (prompts) to large language models (LLMs) in order to get accurate, creative, or context-aware outputs. It’s both a technical practice and a communication skill.

Why It Matters

- Maximizes Model Performance: Better prompts = better results.

- Bridges Human-AI Interaction: Prompts act as the main interface between people and AI systems.

- Drives Real-World Use Cases: From writing assistants to data analysis, prompt design powers a wide range of applications.

2. Core Techniques in Prompt Engineering

2.1 Basic Techniques

-

Zero-Shot Prompting No examples given. Example: “Summarize the following article in three sentences.”

-

Few-Shot Prompting Provides a few examples to guide the response. Example:

Translate the following to French:

Hello, how are you? → Bonjour, comment ça va ?

What is your name? → Quel est votre nom ?

- Role Prompting Assigns a role or persona to influence tone or behavior. Example: “You are a helpful customer support agent. Respond to this query…”

2.2 More Advanced Techniques

-

Chain-of-Thought Prompting Encourages the model to show its reasoning process. Example: “Explain your steps before giving the final answer.”

-

Output Constraints Guides formatting and structure. Example: “Summarize in 10 bullet points.”

-

Self-Consistency & Self-Checking Run multiple responses and pick the most consistent (self-consistency), or have the model critique its own output (self-checking).

-

Template Prompting Use structured templates for repeatable tasks, like report generation or summaries.

2.3 Emerging Areas

-

Multimodal Prompting Combination of text, images, video, or sound to get richer results. Example: “Create a 10-second video of a cat chasing a laser in a living room.”

-

Emotionally Intelligent Prompting Prompts designed to evoke empathetic, context-sensitive responses. Useful in mental health apps and support services.

-

Recursive Prompting and Meta-Prompting Refining prompts over multiple rounds or using prompts to generate better prompts.

2.4 Practical Tips for Personal Use

Even simple tasks like brainstorming, writing, and summarising benefit from good prompts.

-

Be Clear and Specific Example: “Provide a basic overview of the article in two sentences.”

-

Provide Context Example: “List three habits that might help you based on what you wrote in your journal.”

-

Use Instructional Verbs Example: “Provide five ideas for dinners that cost little to make.”

-

Set Output Constraints Example: “Write no more than 15 words for your motivational quote.”

-

Break Complex Tasks into Steps Example:

- “Summarize this blog post.”

-

“Suggest three follow-up questions.”

-

Iterate and Refine If a response isn’t ideal, rework your prompt and try again.

-

Use Self-Checking Example: “Write a summary, then review it for clarity.”

-

Avoid Overloading One clear request is better than many mixed ones.

3. Tools and Platforms

Several tools now support structured prompt engineering:

- LangChain – Build chains of prompts and tools for complex workflows.

- PromptLayer – Track, version, and analyze prompt performance.

- OpenAI Playground / Anthropic Console / Google Vertex AI – Visual interfaces for quick testing.

- PromptBase – A marketplace for buying and selling prompt templates.

- Orq.ai – Supports multimodal input, versioning, and team collaboration.

- Mirascope – Offers real-time feedback and optimization.

4. Emerging Frontiers and Future Trends

4.1 What’s New and Underexplored

-

Multimodal and Non-Text Prompting Expands prompting to visuals, audio, and sensor data.

-

Prompt Security and Robustness Techniques like context isolation and input validation prevent injection attacks or unwanted behavior.

-

Customization and Personalization AI prompts adapt based on previous interactions or user profiles.

-

Automated Prompt Optimization AI now helps write better prompts, evaluate their quality, and optimize them automatically.

-

Human-AI Co-Creation AI can suggest drafts while humans guide or refine them.

-

Domain-Specific Prompting Different industries (medicine, law, science) require field-aware prompt strategies.

-

Low-Code / No-Code Tools Platforms simplify prompt creation for non-technical users.

-

Multi-Turn and Long-Form Prompting Managing long conversations or memory-aware tasks is a growing area.

-

Reverse Engineering Prompts Analyzing successful prompts to understand what works and why.

4.2 What’s Ahead

-

Generative AI-Assisted Prompting Tools that suggest better prompts on the fly.

-

Adaptive, Interactive Prompts Dynamic prompts that evolve during a conversation.

-

Ethical and Fair Prompting Increasing focus on reducing bias and improving transparency.

-

Industry Standards and Best Practices Emerging frameworks to guide consistent, safe prompt engineering.

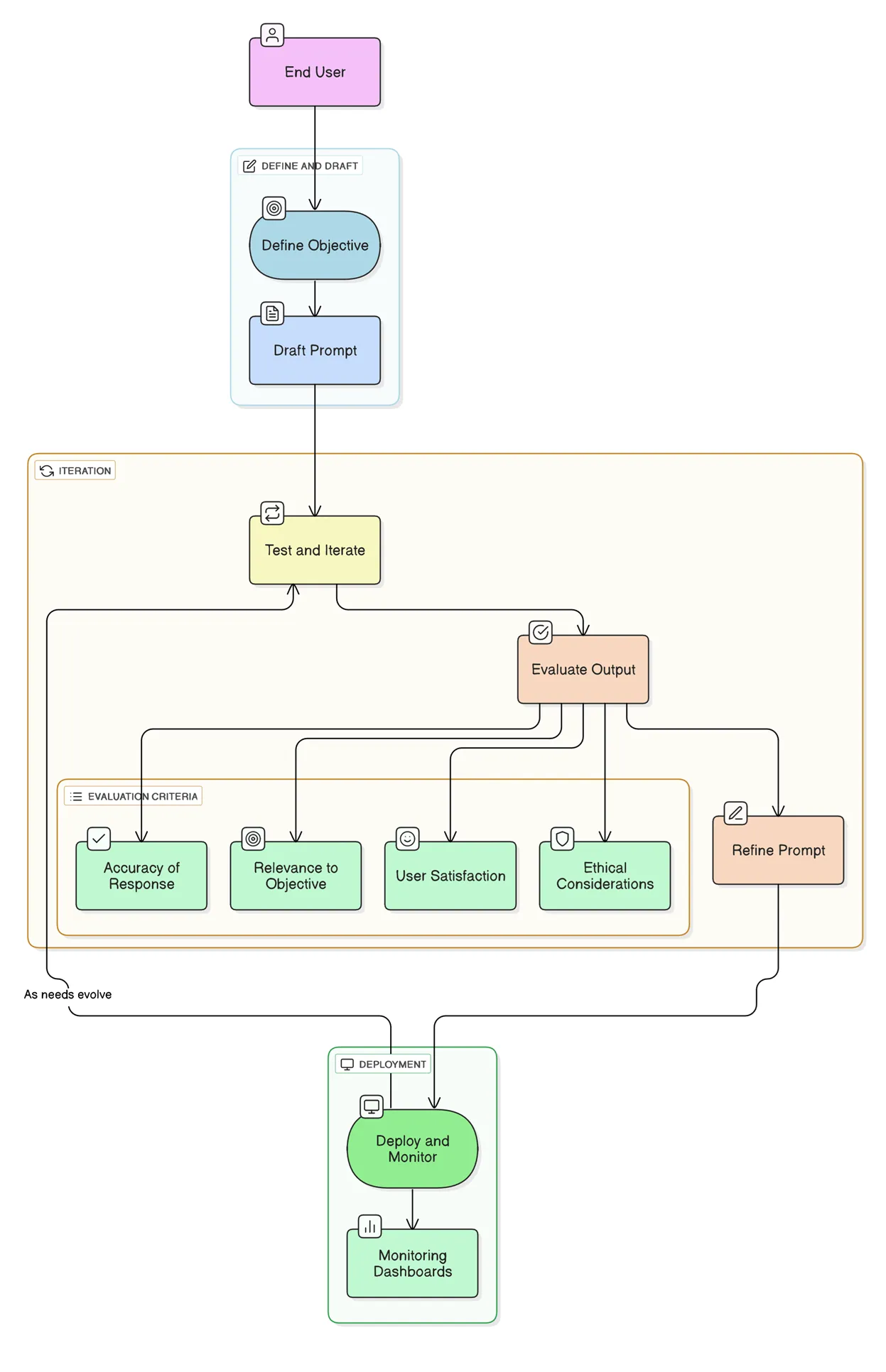

5. Visualizing the Prompt Lifecycle

A simplified diagram (not included here) illustrates the typical prompt engineering lifecycle:

- Define target objective

- Create initial prompt

- Generate model response

- Evaluate (accuracy, relevance, ethics, etc.)

- Refine prompt

- Repeat evaluation

- Cycle continues as needs and models evolve

6. Conclusion

In 2025, prompt engineering is no longer optional—it’s an essential bridge between human goals and machine intelligence. Shaping your prompts well helps you succeed in writing code, creating art, or asking the right questions.

Learning important techniques, trying out different technology, and keeping ethics in mind allow both developers and hobbyists to put AI to more meaningful use.