Artificial Intelligence has quickly moved from sci-fi territory to a daily tool for creative professionals. Writers use GPT to brainstorm scenes. Designers prompt tools like Midjourney for digital art. Entrepreneurs spin up content-driven side hustles in minutes. But amid the convenience and creativity lies a question that’s harder to automate: Who actually owns what—and what’s legally and ethically fair to monetize?

This guide is for the curious and the cautious alike—creators experimenting with AI, professionals adapting to new tools, and anyone wondering where the guardrails are (or should be).

1. Who Owns AI-Generated Content?

The simple version: in most jurisdictions, you can't copyright something made solely by a machine. In the U.S., for instance, copyright protects "original works of authorship," and the Copyright Office will register a work only if a human being created it[1]. That means if a piece of content is entirely machine-generated (say, an AI image created from a single-word prompt), it likely isn’t protected by copyright law.

However, if you guide the output meaningfully (curating results, editing, or combining it with original writing or media), you may be able to claim copyright in the final result to the extent of your human contribution[2].

This was tested in 2023, when the U.S. Copyright Office granted limited protection to a comic book where the story and layout were human-made, but the illustrations were created using Midjourney. The images themselves were deemed uncopyrightable, but the selection and arrangement of those images were protected[3].

Practical tip: If you’re using AI in your workflow, think like a creative director, not just a user. Keep records of your edits, revisions, or prompt iterations. They could help establish authorship later.

2. How Much Human Input Is “Enough”?

Many wonder: If I tweak the output a little, does that make it mine? Unfortunately, there's no fixed percentage or formula. Courts tend to look for substantive, creative input that reflects human decision-making.

Consider a YouTube script partially written by GPT. If you edit it heavily, infuse your voice, and restructure it for clarity and engagement, the result reflects your authorship. But if you paste AI output verbatim, you may not hold enforceable rights, especially if someone else uses a similar prompt and gets similar text.

Interesting angle: Some artists now specialize in “prompt engineering”, crafting detailed, stylized prompts as a new form of creative authorship. While prompts themselves may not be copyrightable, their results—when thoughtfully combined and curated—may reflect original work[4].

3. Can Someone Else Use the Same Prompt or Output as Me?

Yes—and it’s happening more often. Since AI models don’t guarantee unique results, two users can end up with nearly identical images, stories, or scripts. And unless you add significant human edits, you may not have legal grounds to stop them.

This has implications for SEO writers, visual artists, and businesses relying on AI-generated marketing assets. Many find that building a recognizable brand voice or unique post-editing process helps avoid the “generic AI sameness” trap—and protects their work from easy imitation.

4. Could I Be Liable for What My AI Writes or Creates?

Short answer: yes. Most AI tools, including GPT-based platforms, include terms that place legal responsibility for outputs on the user. That means if your AI-generated blog post unintentionally reproduces copyrighted text, or your AI-generated image uses a private individual's likeness, you could be liable[5].

Case in point: In 2023, an AI-generated song mimicking Drake’s voice went viral—and was quickly pulled after the label cited right-of-publicity and copyright claims[6]. While the creator may have intended it as satire or fan art, the lack of consent and commercial scale made it legally vulnerable.

Practical advice: Always review outputs for potential infringements, especially in sensitive or commercial contexts. Some creators use plagiarism detectors, reverse image search tools, or hire editors to vet content before publishing.

5. AI and Fair Use: Where’s the Line?

Fair use is a legal doctrine allowing limited use of copyrighted material without permission—for purposes like criticism, news reporting, or parody. Many AI tools are trained on massive datasets scraped from the internet, which likely include copyrighted works.

The legality of using these works as training data is still under debate. But for creators, the more pressing question is: If I use AI that draws on that training, am I responsible for what it outputs?

Courts' views here are still evolving. Judges have held that using copyrighted material for a “transformative” purpose, one that turns it into something new, can qualify as fair use[7], and similar reasoning might extend to generative AI. But relying on this without human input or transformation could be risky.

Safer ground: When using AI-generated content, modify it, contextualize it, or add commentary. If it resembles something well-known, ask: does this convey a new message or serve a new purpose? If not, it might not be fair use.

6. What About Images, Music, and Voices?

AI tools that generate visuals, songs, or voices open new possibilities—and new legal gray zones. One major concern: right of publicity, which protects people from unauthorized commercial use of their name, likeness, or voice.

Example: If you use an AI voice model to imitate Morgan Freeman for a podcast ad, even as a joke, you could be violating his right of publicity—even if the model was “trained from scratch.” Some U.S. states like California and Tennessee have strong protections here[8].

Similarly, platforms like Shutterstock and Adobe Stock are beginning to restrict uploads of AI art generated using models trained on copyrighted images without permission. This signals a shift toward more regulated ecosystems for creative assets.

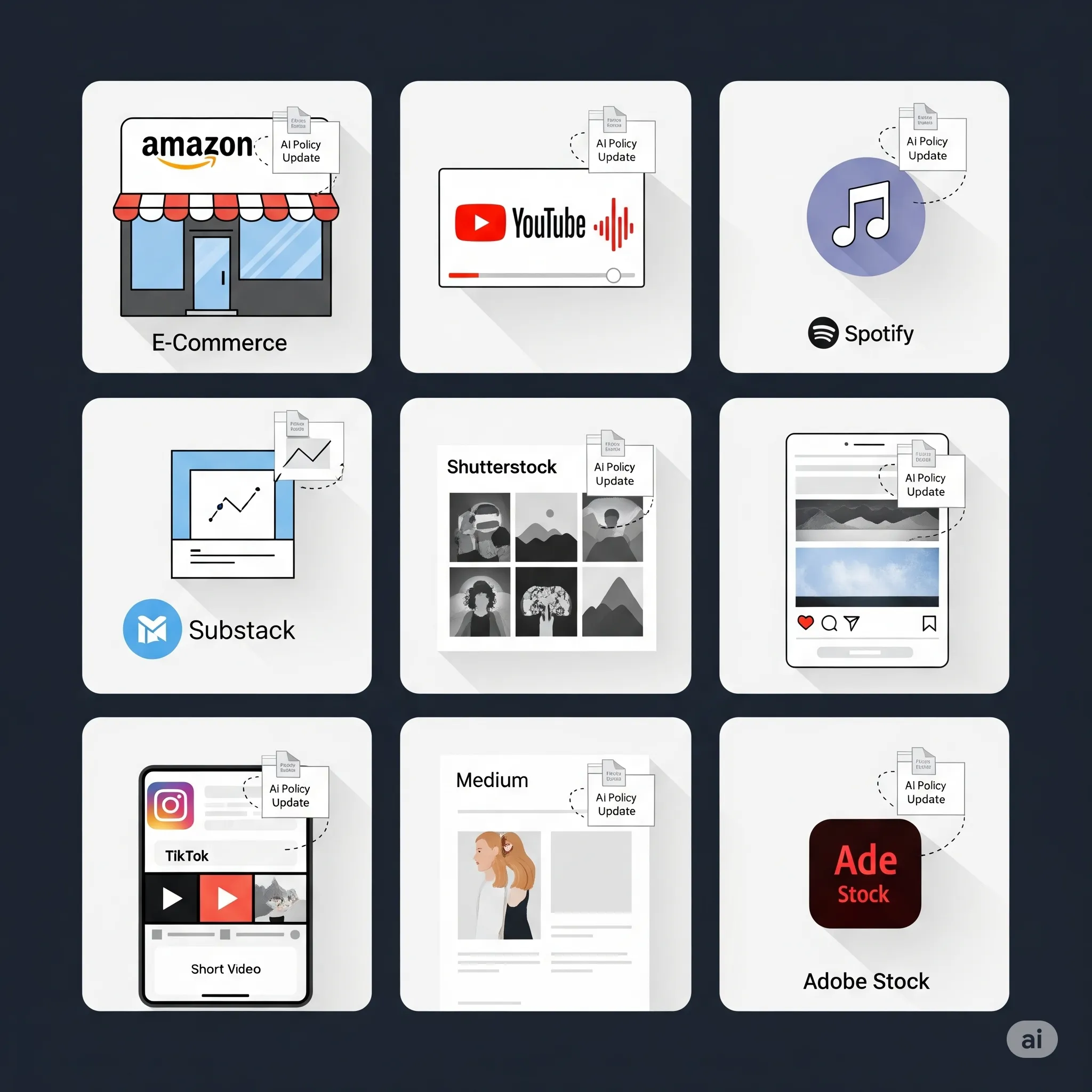

7. How Are Platforms Handling AI Content?

Major platforms are starting to define their positions more clearly:

- Amazon KDP now requires authors to disclose whether a book was generated with AI, even partially[9].
- YouTube has begun testing disclosures for AI-assisted content and may add metadata flags for transparency.
- Spotify removed several AI-cloned artist songs after complaints from record labels, raising questions about genre mimicry and ownership[10].

For creators, this means monetizing AI content isn’t just a legal question—it’s also about aligning with the evolving terms of each platform.

8. Real Cases: What Success (and Trouble) Looks Like

❯ Win: The AI-Enhanced Author

A self-published romance author used GPT to co-develop plot twists and dialogue, then revised the entire manuscript with her editor. She retained clear authorship, disclosed AI assistance on Amazon, and saw a 30% boost in productivity—with no legal friction.

❯ Warning: The Copycat Blog Collapse

A content marketer launched dozens of AI-generated niche blogs with minimal oversight. Within weeks, search engines demoted the sites for duplication, and one post triggered a DMCA takedown. The model had regurgitated portions of a travel article—almost verbatim.

9. So… Is It Worth Using AI to Make Money?

Yes, but with eyes wide open. Creators who view AI as a collaborator, not a shortcut, tend to build more resilient and legally safe businesses. They stay involved. They shape outputs. They build systems of trust, whether through editing, attribution, or transparency.

In this fast-moving space, it's worth asking not just what you can do with AI, but what you should. Ethical, transparent, and human-informed use isn’t just legally safer. It’s what helps you stand out in a world where machines can mimic, but not yet create meaning on their own.

References

1. U.S. Copyright Office, Compendium of U.S. Copyright Office Practices, § 306 (2022).
2. Berkic, J., “Copyright and AI: Human Authorship in Machine-Generated Works,” Harvard Journal of Law & Technology, 2023.
3. U.S. Copyright Office, “Zarya of the Dawn” Decision (2023).
4. Devlin, J., “Prompt Engineers and the Rise of AI-Directed Creativity,” MIT Tech Review, 2024.
5. OpenAI Terms of Use, 2024.
6. The Verge, “AI Drake Song Pulled from Spotify After Legal Pushback,” 2023.
7. Authors Guild v. Google, 804 F.3d 202 (2d Cir. 2015).
8. Right of Publicity State Law Survey, Davis Wright Tremaine LLP, 2024.
9. Amazon KDP Help, “AI Content Guidelines,” Updated 2024.
10. Billboard, “Spotify Removes AI Tracks Mimicking Famous Artists,” 2023.